1.2 Colab and Jupyter Notebooks

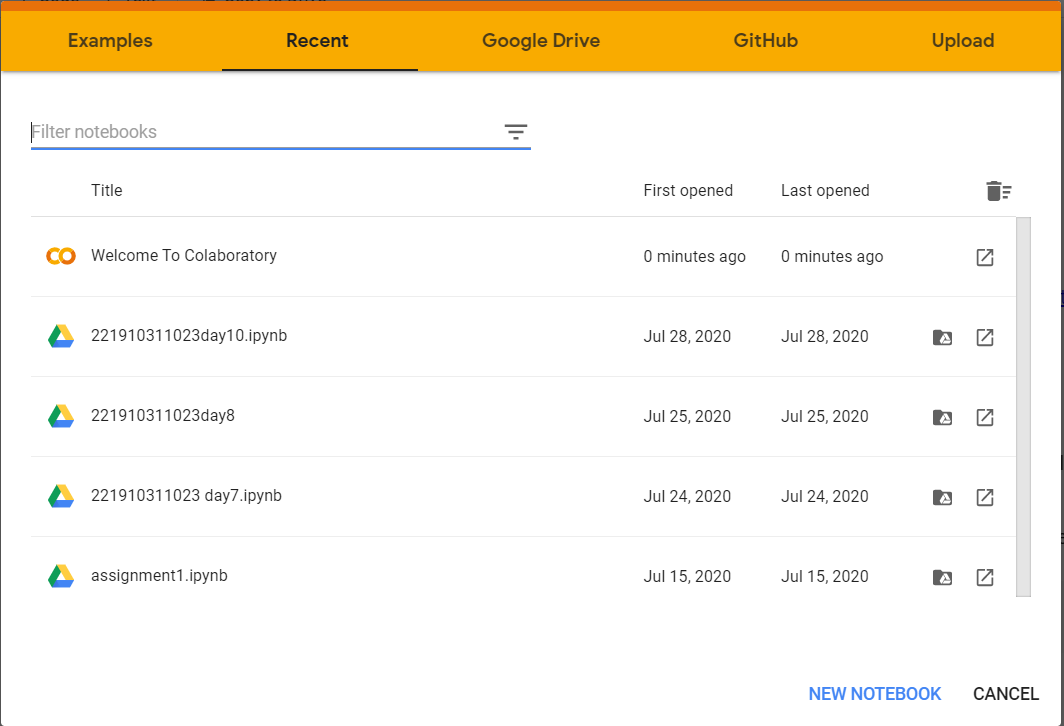

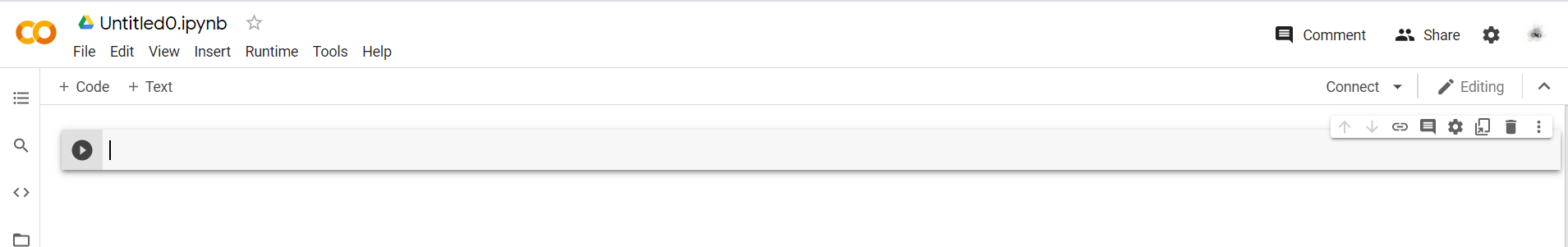

Colab notebook

In 2018, Google launched a platform called ‘Google Colaboratory’ (commonly known as ‘Google Colab’ or just ‘Colab’). Colab is a cloud-based platform built on the Jupyter Notebook framework and designed mainly for machine learning and deep learning work.

TensorFlow + Keras + Colaboratory = Deep Learning Made Easy

Colab offers a free CPU/GPU quota and a preconfigured virtual machine instance set up to run the TensorFlow and Keras libraries from a Jupyter notebook.
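To see what a preconfigured environment actually provides, a first cell can check which libraries are importable. The following is a minimal sketch rather than an official Colab recipe: the helper name `describe_environment` is hypothetical, the Colab detection is a common heuristic and not a guaranteed check, and only the standard library is used so the cell also runs outside Colab.

```python
import importlib.util
import sys


def describe_environment(libraries=("tensorflow", "keras")):
    """Return a dict describing the current Python environment."""
    info = {
        # Heuristic (assumption): the google.colab package is normally
        # already loaded in a Colab runtime, so it shows up in sys.modules.
        "in_colab": "google.colab" in sys.modules,
        "python": sys.version.split()[0],
    }
    for name in libraries:
        # find_spec returns None when a top-level package is not installed.
        info[name] = importlib.util.find_spec(name) is not None
    return info


for key, value in describe_environment().items():
    print(f"{key}: {value}")
```

On a runtime where TensorFlow is installed, `tf.config.list_physical_devices("GPU")` can then be used to list any attached GPUs.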

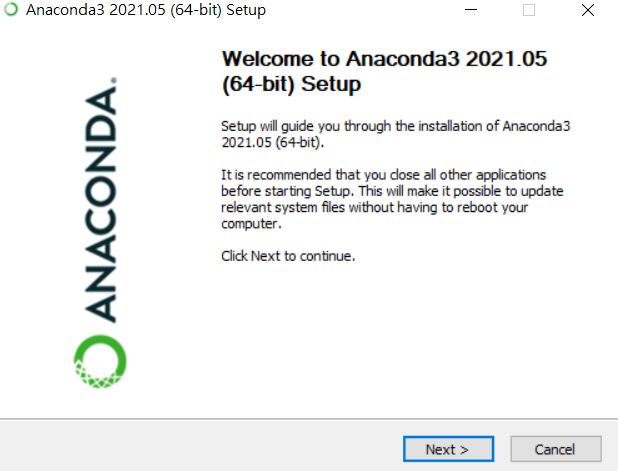

A Jupyter notebook lets you write and execute Python code in your web browser, with the code running locally on your own machine. Jupyter notebooks make it easy to tinker with code and execute it in small pieces; for this reason they are widely used in scientific computing.
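The piece-by-piece workflow can be sketched as follows. Each commented block stands for one notebook cell; because all cells share a single interpreter session, a variable defined in one cell stays available to the cells run after it. The data and variable names here are purely illustrative.

```python
# Cell 1: define some data once.
measurements = [2.0, 4.0, 4.0, 5.0]

# Cell 2: compute a result from it; this cell can be edited and re-run alone.
mean = sum(measurements) / len(measurements)

# Cell 3: tinker further without re-running the cells above.
variance = sum((x - mean) ** 2 for x in measurements) / len(measurements)
print(mean, variance)  # prints: 3.75 1.1875
```

Run as a plain script, the three blocks behave the same way; the notebook simply lets each one be executed and revised independently.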